The “Freest Writer” in Stalin’s Russia Laurence Sterne, the eighteenth-century author of Tristram Shandy and A Sentimental Journey, might seem an unlikely figure to capture the imagination of early Soviet intellectuals in the 1920s and 1930s. The Bolshevik Revolution dismantled the cultural institutions of the old regime, displaced much of the pre-revolutionary intelligentsia, and set out to create a new literary canon for a new Soviet reader. From the outset, literature was subject to political control. By the 1930s, the state increasingly defined a canon of approved literary classics, while the newly established doctrine of Socialist Realism began to dominate official literary institutions. What place could there be, in such a system, for an eccentric Yorkshire clergyman whose popularity in Russia had peaked more than a century earlier, at the turn of the nineteenth century? And yet, in the two decades following the 1917 Revolution, Sterne’s name began to appear with notable frequency in lecture halls, private correspondence, diaries, and unpublished manuscripts. Laurence Sterne and His Readers in Early Soviet Russia: The Secret Order of Shandeans traces Sterne’s reappearance in early Soviet culture. Drawing on letters, diaries, translation drafts, marginal notes, illustrations, and editorial correspondence, the book reconstructs how Soviet readers encountered Sterne and what they sought in his writing. In mid-1920s Leningrad, an undergraduate student, Edvarda Kucherova, wrote to a friend: “You cannot imagine how much I adore Sterne. In a very personal way and with such gratitude, for he helps me live. Thanks to him, it is so clear that everything that is closest and most desirable is always so far away from us. Sterne taught me to understand and endure this.” One of Sterne’s most influential early Soviet advocates was Viktor Shklovsky, a literary critic associated with the experimental literary criticism of the 1920s. 
In a 1921 pamphlet devoted to Tristram Shandy, Shklovsky presented Sterne as a ‘radical revolutionary of form’ whose digressive prose anticipated the poetry of the Russian Futurists and paintings by Picasso. Sterne’s Soviet afterlife, however, was not confined to avant-garde circles. By the 1930s, as official discourse turned against modernism, Sterne continued to be read, but attention shifted from questions of form to philosophical and psychological concerns. Despite this change, one association remained constant. Sterne was repeatedly linked, whether approvingly or critically, with artistic and inner freedom. The book takes Sterne as a point of entry into the everyday intellectual life of Soviet translators, critics, and readers. The circulation of works by the ‘freest writer of all times’ (as Friedrich Nietzsche once called Sterne), an author with no obvious utility for the Soviet state, allows the reconstruction of a form of intellectual life that existed alongside, and partly outside, the enforced unanimity of Stalinist culture. Readers turned to Sterne for many reasons. In 1937, the celebrated Soviet writer Isaac Babel and his wife, Antonina Pirozhkova, consulted A Sentimental Journey while searching for a name for their newborn daughter. Among those drawn to Sterne in the 1930s was Gustav Shpet, one of Russia’s leading philosophers before the Revolution. Excluded from academic philosophy under Soviet rule, Shpet turned to literary translation as a means of both economic and intellectual subsistence. In his notes to an unfinished translation of Tristram Shandy, he read Sterne as a belated Renaissance humanist, an author who sought distance from his own times by immersing himself in older comic traditions. Shpet’s fate, however, underscores the limits of such refuge. Arrested during the Great Terror, he was executed in 1937. The book follows figures from very different backgrounds. One of them is the Ukrainian critic Stepan Babookh. 
Before becoming a literary editor, most notably one of the editors of the 1935 Russian edition of A Sentimental Journey, he had been a worker, soldier and Bolshevik activist. Babookh discovered English literature while being held as a POW by the British during the war, first in an internment camp in India and later in a London prison. A self-taught intellectual of the new Soviet generation, he chose to abandon a Party career in order to become a scholar of English literature. In the late 1930s, Izrail Vertsman, a scholar of Marxist aesthetics, defended the first Soviet doctoral dissertation devoted to Sterne. Vertsman belonged to a group of critics known as “the Current”, led by philosophers Mikhail Lifshitz and Georg Lukács. These intellectuals advocated more sophisticated forms of Marxist criticism, opposing the crude (in their view) sociological approaches of the 1920s. For Vertsman, Sterne embodied the spirit of creative renewal he associated with “the Current”, yet his private letters reveal the difficulty of reconciling his deep admiration of Sterne with the intellectual constraints of the Stalinist 1930s. Through these intertwined lives, the book reconstructs what it calls the secret order of Shandeans—an imagined community of readers ranging from literary scholars, translators, and high school students to soldiers and Gulag prisoners. For many of them, Sterne’s humour offered an imaginary escape at a time of political uncertainty and mounting restrictions on creative freedom, when public expressions of individuality were becoming increasingly dangerous. Featured image by Alexander Popadin via Pexels. OUPblog - Academic insights for the thinking world.

Mary Kingsbury Simkhovitch’s fight for affordable housing [timeline] Mary Kingsbury Simkhovitch—featured as a “Wonder Woman of History” in a series produced by DC Comics—was a key figure in America’s settlement house movement. Throughout the early twentieth century, she spearheaded efforts to improve living conditions for immigrants and the disadvantaged in American cities. Her lifelong advocacy for public housing and urban reform remains urgently relevant almost seventy-five years after her death. Discover Mary K. Simkhovitch’s extraordinary legacy with our interactive timeline below. Featured image provided by Betty Boyd Caroli.

Centuries strong: Black history told through 10 essential Oxford Reads African American history does not begin with the founding of the United States—its roots stretch centuries deep. Black experiences, intellectual traditions, resistance, and cultural innovation have shaped the story of America. This timeline brings together Oxford works that illuminate pivotal moments across over two hundred transformative years—from a Pulitzer Prize–winning biography of Harriet Tubman to long-overlooked accounts from the later Civil Rights era. Explore the essential role of historically Black colleges and universities, and encounter richly drawn portraits of trailblazers like Louis Armstrong and Althea Gibson. Taken together, these books reveal a legacy of resilience, creativity, and influence that has defined American life from the colonial era through the 20th century. Explore the depth and breadth of African American history with this curated selection of Oxford University Press titles—stories that predate 1776 and continue to shape the nation we know today. Featured image by Joel Filipe via Unsplash.

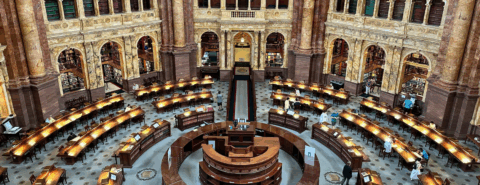

Reintroducing Justice Robert Jackson In 1952, Justice Robert Jackson issued a concurring opinion in the case of Youngstown Sheet & Tube Co. v. Sawyer, in which a majority of the Supreme Court held that President Harry Truman could not invoke executive power to seize several of the major U.S. steel manufacturing companies in order to prevent a nationwide steel strike that the Truman administration claimed would disrupt the participation of the United States in the Korean War. Jackson’s opinion in Youngstown sketched a framework for executive power under the Constitution, identifying three examples of executive decisions against the backdrop of congressional authority. He set forth a continuum of executive power, ranging from instances in which executive decisions were “conclusive and preclusive” of the authority of other branches, to ones in which Congress and the executive shared powers and the branches operated in a “twilight zone” of concurrent authority, to ones in which an executive decision was in contradiction to a congressional effort to restrain it. When Jackson’s opinion appeared, it garnered some appreciative commentary in academic circles but did not otherwise attract much attention. Jackson’s Youngstown concurrence was revived, however, in two memorable opinions in American constitutional law and politics. The first was United States v. Nixon, in which Chief Justice Burger quoted a statement by Jackson that the dispersion of powers among the branches of government by the Constitution was designed to ensure a “workable government.” Burger concluded that allowing President Nixon to assert executive privilege against a subpoena in a criminal proceeding merely on the basis of a “general interest in confidentiality” would gravely interfere with the function of the courts and render the government “unworkable.” The second was Trump v. 
United States, in which Jackson’s statement in Youngstown that in some instances the president’s power to make executive decisions was “conclusive and preclusive” was used by Chief Justice Roberts to show that granting presidents absolute immunity for their official acts was necessary to enable them to execute their duties fearlessly and fairly. More than seventy years after Jackson issued it, his Youngstown concurrence remains the most authoritative statement of the scope of executive power under the Constitution. But what of the justice who issued that opinion? Robert Jackson was arguably one of the most influential persons in the mid-twentieth-century legal profession and a unique figure in American legal history. Yet today he is not widely known and has in some respects been misunderstood. Despite his having one of the largest collections of private papers in the Library of Congress, there has been comparatively little scholarship or popular writing devoted to Jackson. It is time to reintroduce him. Jackson was the last Supreme Court justice to have entered the legal profession by “reading for the law,” a process in which people apprenticed themselves to law offices prior to taking a bar examination. He would eventually study law for one year at Albany Law School and receive a degree, but he never attended college. His family were dairy farmers in western Pennsylvania and New York, and he was the first in his family to pursue a legal career. By 1934 he had become one of the more successful lawyers and wealthy residents in Jamestown, New York. That year Jackson was approached by members of the Franklin Roosevelt administration and recruited to join the Bureau of Internal Revenue, even though his practice had not included tax law. From that position he progressed rapidly through New Deal agencies, becoming Solicitor General of the United States in 1938 and Attorney General in 1940. 
By that year he was on the short list for Supreme Court appointments, and was nominated to the Court by Roosevelt in 1941. Jackson seemingly had every quality necessary to be an influential Supreme Court justice, possessing exceptional analytical and forensic skills and being a gifted writer. But he ended up somewhat unfulfilled on the Court, chafing about its isolation from foreign affairs during World War II and having fractious relationships with some of his fellow justices, notably Hugo Black and William O. Douglas. In the spring of 1945, he was offered the position of chief counsel at the forthcoming Nuremberg trials and took leave from the Court, uncertain about whether he would return. Jackson was largely responsible for the format of the trials, and although he had numerous difficulties with representatives of the other allied powers prosecuting Nazi war criminals, especially those from the Soviet Union, he said in his memoirs that he regarded his time at Nuremberg as the high point of his experience. Jackson’s two years at Nuremberg were also a time in which he began an amorous relationship with his secretary, Elsie Douglas, to whom he would eventually leave his extensive private papers in his will. Douglas continued as his secretary when Jackson returned to the Court after Nuremberg, and when Jackson suddenly died of a heart attack in October 1954, it was in Elsie Douglas’ apartment. After Jackson’s return to the Court in 1946 his relations with colleagues improved, and his last major participation in a Court case came with Brown v. Board of Education in the 1952 and 1953 terms, in which Jackson, through writing successive memos to himself, eventually joined the Court’s unanimous opinion invalidating racial segregation in the public schools. Jackson had a heart attack in March 1954 and only returned to the Court on the day the Brown case was handed down. He then sought to recover over the summer of 1954, only to succumb that October. 
All in all, a memorable life and career and a fascinating, complicated personality, whose remarkable talents somehow did not quite suit him for the role of a Supreme Court justice. Featured image by Abdullah Guc via Unsplash.

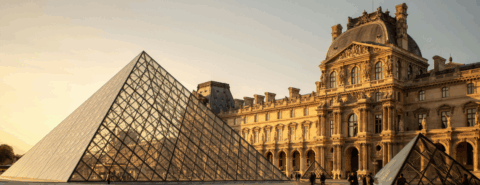

Quatremère de Quincy: the founding father of museophobia? Antoine-Chrysostôme Quatremère de Quincy (1755-1849) was celebrated during his lifetime as the greatest European writer on the arts. The architect Sir John Soane admired his essay on Egyptian architecture while Hegel considered his research on ancient Greek polychromatic sculpture a masterpiece. Despite the breadth of Quatremère’s writings, today he is famous for inventing an idea that he never embraced: namely, that art should remain in its original context because displacing it in museums changed its meaning and neutered its power. While researching my biography of Quatremère, I discovered that his attitude towards museums was surprisingly positive. The years that he spent in Italy during his twenties shaped his outlook. The sight of antiquities excavated from Herculaneum in the nearby royal palace at Portici led him to propose site museums ‘like those in Italy’ once he returned home. Why not reuse the ancient baths in Paris and the amphitheatre in Nîmes as museums of Gallo-Roman antiquities, he asked? In Italy, Quatremère also studied in large museums. He found that the papacy’s ‘sumptuous galleries’ in Rome revalorized pagan artefacts as objects of beauty and knowledge. Indeed, the city itself, he later remarked, was a museal microcosm of world geography and history. Far from opposing the displacement of artworks from their original contexts, he remarked how artefacts imported from afar during antiquity had been resurrected in Rome when they took on new meanings and roles. For instance, he found that the Egyptian figures (now believed to be Roman telamons) greeting visitors to the Museo Pio-Clementino had a purpose no less authentic than their original one: ‘by making them support the corniche that decorated the magnificent entrance’, Pius VI had ‘returned them to their first destination’. Quatremère returned from Italy convinced that it was necessary to centralize artworks in capital museums. 
He therefore joined the chorus of support for a national museum in the Louvre. Despite his antipathy towards the Revolution, in 1791 he proposed transforming the palace into a ‘temple of knowledge’. After the museum finally opened in August 1793, its administrators relied upon his expertise. For instance, in 1797, he examined thousands of paintings to determine what to share with the Special Museum of the French School in Versailles. Despite previously opposing the spoliation of Italy to enrich the Louvre, in 1807 he applauded Napoleon for amassing ‘treasures of genius from all centuries’. During the Bourbon Restoration, he defended the Louvre with greater zeal because its fortunes aligned with his royalist politics. He criticized but failed to prevent ‘dishonourable’ demands to return seized artworks to their original countries. He penned a memorandum about rehanging what remained and recommended acquiring replacement antiquities such as a Parthenon metope from Choiseul-Gouffier’s collection. In 1821, he boasted that the newly imported Venus de Milo was ‘the rarest and most valuable item in our Museum’. Quatremère’s pride in the Louvre did not prevent him supporting capital museums elsewhere. He was delighted, for example, to inspect the Parthenon sculptures in the British Museum. Lord Elgin saved these antiquities from the ‘barbarian’ Ottomans, he opined, and their ‘handsome arrangement’ in London was ‘even better than on the Parthenon itself’. During his youthful Italian travels, Quatremère also admired energetic and enlightened private collectors such as Stefano Borgia and Ignazio Biscari. Their example taught him that private collecting benefited everyone, even if some avaricious collectors ‘amassed for the sake of amassing’. In France, he therefore praised Grivaud de la Vincelle, whose ‘patriotic’ efforts helped mitigate the absence of a national museum of antiquities, and Léon Dufourny, whose well-ordered and accessible collection served ‘public utility’. 
During the second half of Quatremère’s life, he created a sizable collection of his own in his mansion on the Rue de Condé, Paris: at his death, he owned around 3,000 printed volumes, numerous modern artworks, ancient Greek vases, Egyptian figurines, ex-votos seized from the temples of Asclepius and Hygeia, and small figures excavated in Italy. Far from being a museophobe, then, Quatremère enthused about site museums, capital museums, and private collections alike. His published writings provide direct and indirect explanations for why he considered museums indispensable. For Quatremère, the future of art depended upon museums because artists must study the ‘corpus of lessons and models’ from antiquity and the modern revival. If ancient Greek artists had perfected art without collections, he theorized that the moderns could never recover the causes of ancient greatness and must therefore turn to museums: ‘In the current state of things, God forbid that artists should be deprived of their assistance!’ Artists could only improve, moreover, if their judges understood beauty, which required a mental ‘ladder of comparison’ that one must calibrate carefully through studying many artworks. Museums were also integral to the advancement of knowledge. During the eighteenth century, he reflected, the enrichment of museums enabled the ‘spirit of observation’ to triumph over the ‘spirit of system’. Since the consolidation of scattered artefacts into major collections finally enabled objects to ‘illuminate and explain one another’, he predicted that future scholars would discern new connections, decode patterns, improve taxonomies, and identify fakes. In Quatremère’s mind, good museums therefore facilitated scholarly efforts to preserve and interpret authentic vestiges of the past whereas bad museums undermined this endeavour. 
He railed at the Museum of French Monuments precisely because he believed that its director, Alexandre Lenoir, presided over a ‘workshop of demolition’ that creatively restored medieval sculptures to illustrate period rooms. Lenoir’s superficial decorative needs and anachronistic assumptions discoloured the past and offended Quatremère’s sense of art and politics. For Quatremère, respect for past mentalities via authentic reminders of the past was the ultimate antidote to presentist dogmatism: ‘only the history of peoples, monuments, and the arts of antiquity’, he observed, ‘can expand the philosopher’s horizon and transform into a complete theory the fleeting observations that the brevity of human life otherwise condemns us to make.’ Featured image by Edoardo Bortoli on Unsplash.
