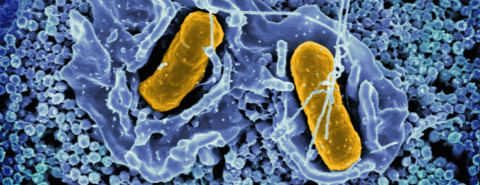

The truth about the microbiome: what’s real and what isn’t?

What’s really happening with those microbes inside us? Are we really superorganisms or is it all hype? Dr Berenice Langdon reveals the truth about the microbiome.

Does ‘microbiome’ mean our friendly gut bacteria?

Yes, sort of. Many people are aware that the term ‘microbiome’ refers to ‘friendly gut bacteria’. But ‘microbiome’ also refers to all the microbes or germs inside us. These are mainly bacteria – but they also include fungi, viruses, and many others. The word ‘microbiome’ also refers to where these microbes are: the ‘biome’ part of the word. They could be in our gut or on our skin, but a microbiome can also refer to much bigger locations outside the body: the microbiome of a forest, even an ocean. And going back to the human gut: are these microbes friendly? Well, some are and some aren’t. Like all best buddies, even the ‘friendly’ ones can be awkward sometimes.

Is it true that our microbiome helps protect us from infections?

We know that if we take antibiotics, they can reduce our gut microbiome, and a diarrhoeal infection can then move into our gut. On the other hand, we know that the microbiome is mainly made up of bacteria, and bacteria often cause infections. So does our microbiome protect us from infections, or does it cause infections? The answer is a little bit of both. Our gut microbiome is usually made up of benign bacteria, the sort that don’t cause us harm. These benign bacteria keep the ‘baddy bacteria’, the pathogens, out of the gut. They do this either by outcompeting the bad bacteria, or by making the gut a bit too acidic for the bad bacteria to grow. In this way we can see that the gut microbiome is helping us, just a bit, to avoid gastrointestinal infections.
On the other hand, if our ‘friendly’ gut bacteria happen to get out of our guts and into the wrong place, like our bloodstream or our brain, they can cause a very serious infection, even though these bacteria are generally benign and friendly.

Is it true that probiotics are live microorganisms that improve our health?

We know that probiotics are live microorganisms. This is part of their definition, and the idea is that taking them is meant to improve our health in some way. It’s the ‘improve our health’ part that’s difficult to prove. Scientists have been testing probiotics for decades to determine if they have an effect on our health. They’ve tested their effect on all sorts of medical conditions, including constipation, diarrhoea, ulcerative colitis, and irritable bowel syndrome, as well as other non-gut related conditions such as Parkinson’s and autism. So far, however, there’s no evidence to show that probiotics help any of these conditions. The American Gastroenterological Association, for the most part, does not recommend taking probiotics except as part of a research trial. Many probiotics currently on the market contain bacteria that are found in our food anyway (in yoghurt, for example) or are, in fact, already inside us. Some probiotic packaging even says so itself: contains live microorganisms that naturally exist in the body.

If probiotics don’t do much, are they at least safe? The answer for most people is: yes, probably. The bacteria that make up probiotics are usually fairly benign and don’t usually try to attack us. But for people who are very ill or in intensive care, probiotics are not recommended. Research shows that probiotics can translocate from the gut to the bloodstream. Once in the wrong place, just like the microbes in our gut microbiome, probiotics can cause life-threatening infections or even death.
Is it true that a microbiome is essential for survival?

Amazingly, the microbiome is not essential for survival for all sorts of animals including rats, mice, guinea pigs, chickens, flies, and even fish. All of these creatures have been successfully raised without a microbiome. Even more amazingly, this isn’t new. Scientists have been doing this for over a hundred years. It’s absolutely possible for certain animals to survive just fine without a microbiome, and even have babies. This is a fascinating field of research, and these animals are sometimes known as gnotobiotic animals or germ-free animals. However, it is true that herbivores can’t survive without a microbiome. They are dependent on gut microbes to help them ferment grass or foliage and extract the necessary calories.

Is it true that fermented foods and drinks are healthy?

We know that not all fermented foods and drinks are healthy and, interestingly, not all fermented foods and drinks have microbes in their final product. Alcohol is an obvious example of this: a fermented drink with known health risks and also one in which the final product contains no microbes whatsoever. Other popular fermented products such as soy sauce are full of salt and are also clearly not universally healthy, while the acid contained in the very popular fermented product cider vinegar can dissolve our teeth and is a known cause of oesophagitis. However, we still love fermented foods. Fermentation often makes foods taste great and helps us preserve our food. So, while there are certain benefits to fermented food and drink in terms of food production and preservation, overall fermentation doesn’t automatically make foods healthy.

Is it true that we need to pay attention to our diets to improve our microbiomes?

We should of course pay attention to our diet, by not eating too much, having a varied diet and including plenty of fibre, as this is the route to good health.
But from a microbiome point of view, the bacteria in our guts don’t need much help. Our colon typically contains a trillion bacteria per ml, or 1,000,000,000,000 – a mind-blowingly large number. We also have a wide variety of bacteria inside us, constantly changing minute by minute. We obtain these effortlessly from the bacteria that coat the outside of our foods – even those foods we think of as ‘clean’, like bread and fruit – as well as from the bacteria naturally found within certain fermented foods mentioned above. A wide variety of bacteria in our gut is regarded by some as a mark of health and is easily achieved by eating a wide variety of foods and by daily contact with each other, with the outside world, and with nature.

Is it true that together with our microbiomes we are superorganisms?

No, this is not true. Together with our microbiomes we are not superorganisms. While microbes do help us a bit (helping us digest a little bit more food, avoiding certain infections), they also cause us a lot of work, as we have to protect ourselves from them and avoid infections. It is not a universally positive relationship. But ultimately, we are not superorganisms simply because we do not evolve as one unit. Microbes evolve inside us at a vastly faster rate than we do. And we evolve slowly, evolving protective mechanisms against the microbes, but making use of them when we can.

Featured image by the National Institute of Allergy and Infectious Diseases via Unsplash. OUPblog - Academic insights for the thinking world.

Rethinking nuclear

As someone who has spent decades studying the evolution of nuclear energy, I’ve seen its emergence as a promising transformative technology, its stagnation as a consequence of dramatic accidents and its current re-emergence as a potential solution to the challenges of global warming. While the issues of global warming and sustainable energy strategies are among the most consequential in today’s society, it is difficult to find objective sources that elucidate these topics. Discourse on this subject is often positioned at one or another polemical extreme. Further complicating the flow of objective information is the involvement of advocates of vested interests, as seen in the lobbying efforts of the coal, gas and oil industries. My goal has been to present nuclear energy’s potential role in a sustainable energy future, alongside renewables like wind and solar, without ideological baggage.

An additional hurdle that must be overcome in dealing with the pros and cons of nuclear energy is the psychological context in which fear of nuclear weapons and of radiation impedes rational analysis. The deep antipathy to nuclear phenomena is illustrated by what might be called the “Godzilla Complex” that developed after the crew of the Japanese fishing boat, the Lucky Dragon 5, was exposed to heavy radiation from a nuclear weapons test in 1954. Godzilla was conceived as a monster that emerged from the depths of the ocean due to radiation exposure. It has become an enduring concept that has been portrayed in nearly forty films in the United States and Japan and in numerous video games, novels, comic books and television shows. It is not surprising that fear of nuclear reactor radiation has been widespread. In spite of the fact that there are no documented deaths due to nuclear reactor waste (in contrast to deaths from accidents), it is widely assumed that nuclear reactor waste is quite dangerous.
In contrast, the fact that premature deaths attributable to the fossil-fuel component of air pollution worldwide exceed 5 million annually generates little concern. Similarly, the total waste produced from nuclear energy can be stored on one acre in a building 50 feet high, whereas for every tonne of coal that is mined, 880 pounds of waste material remain. Furthermore, this waste contains toxic components. Yet public concern for nuclear waste clearly overshadows that for coal, despite these contrasting impacts.

After an in-depth review of the most significant nuclear accidents and recognition of the deep psychological antipathy to nuclear energy, I’ve become increasingly interested in the emergence of an international effort to develop safe, cost-effective nuclear energy known as the Generation IV Nuclear Initiative. This began in 2000 with nine participating countries and has since grown substantially. In the early years, the Generation IV Nuclear Initiative took a systematic approach to identify reactor designs that could meet demanding criteria, including the key characteristic of being “fail safe”. Rather than depending upon add-on safety apparatus, “fail safe” designs rely on the laws of nature, such as gravity and fluid flow, to provide cooling in the event that the reactor overheats. Another high-priority design feature is modular construction, allowing multiple units to be constructed in a timely and economical fashion.

After reviewing dozens of options, the Generation IV Nuclear Initiative settled on six designs that it found to be the most attainable and desirable. Since its initial efforts, countries that have embraced the goals of the Generation IV Nuclear Initiative have been pursuing additional designs, including reactors that range in size from quite small to about one third the size of the typical one gigawatt reactor. In my book, I’ve focused my attention on four promising designs.
These four designs eschew the vulnerabilities of using water as a coolant that proved so devastating at Chernobyl and Fukushima. The explosion at Chernobyl was due to steam, and the three explosions at Fukushima were due to hydrogen gas that resulted from oxidation of fuel rods by overheated water. These were not nuclear explosions. Instead, the four designs I’ve highlighted use liquid sodium, liquid lead, molten salts and helium gas as coolants. Liquid-sodium-cooled and liquid-lead-cooled reactors are operating successfully in Russia, while China incorporated a gas-cooled reactor into its grid in 2023. In the United States, Kairos Power is constructing a molten-salt-cooled reactor, while the TerraPower company (founded by Bill Gates) has broken ground on construction of a sodium-cooled reactor in Kemmerer, Wyoming. These are intended to be models for replacing coal-fired power plants with Generation IV nuclear plants. Multiple implementations of this approach are planned through the early 2030s.

Given the worldwide interest in Generation IV reactor development and the many initiatives that are being pursued, it is likely that at least some of these projects will come to fruition in the near future. While success is not guaranteed, there is clearly a need for the general public and students to be kept informed of progress leading up to 2030 and beyond. To help bridge the knowledge gap in this rapidly evolving domain, I’ve launched a newsletter on Substack called “Nuclear Tomorrow.” It’s written for anyone concerned with the intersection of public policy, energy generation, and its impact on global warming. I hope it serves as a resource for those seeking clarity in a complex and consequential field.

Feature image: nuclear power plant via Pixabay.

What all parents need to know to support their teens in college

With the semester well underway, your college student is probably juggling a lot (classes, homework, exams, and writing assignments), all while managing friendships, jobs, and other responsibilities. This balancing act can be tough for any young adult, but it’s often especially challenging for students with ADHD. In high school, your teen may have benefitted from built-in structure and support systems (e.g., teachers, parents) that helped them stay on track and meet their goals. In college, those supports tend to fade, leaving students to navigate much more on their own.

As a parent, you can play an important role in helping your student adjust to these new demands. Sometimes this means offering a little extra “scaffolding” (gentle support and guidance) to help them build the skills they need to thrive on their own. That’s exactly why I wrote Mastering the Transition to College: The Ultimate Guidebook for Parents of Teens with ADHD. It’s packed with practical information and strategies to help you and your teen navigate these years successfully. This blog post offers a first look at some of those tips, so you’ll have tools ready if your student starts to struggle, academically or otherwise, this semester.

- Communication and collaboration are key. You probably know from the high school years that giving unsolicited advice to your teen can backfire. Pushing too hard often leads to resistance. Instead, try to use a calm, collaborative tone. Let your teen know you’re there to support and guide them, but that they are in control of their own decisions. Approaching conversations this way helps your teen feel respected and more open to brainstorming solutions with you.

- Set goals. Before you can help your teen make changes, it’s important to first understand what they want. Ask about their goals, not just in academics, but in all areas of their life that matter to them. Once you know their priorities, you can work together to map out what steps are needed to get there. This also makes it easier to guide them without feeling like you’re imposing. Some of these steps may be addressed in the tips below.

- Help your teen establish an organizational system. This may sound obvious, but it’s incredibly powerful: having a clear system to track tasks and deadlines is a game changer. Encourage your teen to choose a system that works for them. It could be a paper planner, a phone app, or a calendar on their laptop. Whatever they choose, the key is sustainability, so expect some trial and error as they experiment. The goal is to help them feel in control of their time, not overwhelmed by it.

- Encourage your teen to develop a system for completing tasks. College life means that the to-do list is rarely empty. Your teen may feel as if their tasks are never-ending… as one is completed, another is added to the list. Therefore, developing a method for triaging what needs to get completed and by when will be crucial. An approach that balances what is important vs. what is urgent is often a good place to start.

- Discuss all available campus resources with your teen. College campuses offer a lot of support to help your teen succeed. However, students (and parents) often find it difficult to know what resources are available and how to access them. Resources may be academic in nature (e.g., tutoring, office hours, advising, academic accommodations, writing center), mental health related (e.g., student health center, counseling center, skills groups), or logistical (e.g., career services, resident assistants). Knowing what resources to use, when, and how to access them will be essential for ensuring a successful college career. Further, if your teen needs more support than your conversations with them or my book can provide, finding a licensed professional may be a helpful next step. Outside help can be an important part of your teen achieving success.

I hope these tips provide you with a solid starting point in supporting your teen with the transition to and through college. For even more guidance and detailed advice as to how to implement these strategies, check out my book Mastering the Transition to College: The Ultimate Guidebook for Parents of Teens with ADHD. Feature image: photo by Joshua Hoehne via Unsplash.

Open Access Week: Nothing about me, without me

In a 2011 speech about shared decision making in healthcare, the UK Secretary of State for Health, Andrew Lansley, coined the phrase “nothing about me, without me”. Used at the time to summarise efforts to empower patients in decisions about their care, the phrase has since been borrowed by advocates and activists on a range of social justice topics.

This year’s Open Access Week poses the question: “How, in a time of disruption, can communities reassert control over the knowledge they produce?” Here at OUP, we were inspired to delve into our open access publishing for examples of research that doesn’t just study communities, but actively involves them. From shaping research questions to guiding implementation, these projects center the voices and experiences of the people at their heart. This commitment to community-led knowledge creation isn’t limited to the articles themselves. It’s reflected in the editorial policies, peer review practices, and team structures that support our journals, ensuring that open access is not just about availability, but about equity and inclusion in research and publishing processes.

From participatory research approaches to elder care, to self-determination paths for trans and gender diverse people, to rural ownership of businesses in areas of high tourism, and citizen empowerment during energy transitions – our open access publishing is full of examples of the benefits of including people in the process of generating knowledge about them.
All articles included here are published with an open access license, ensuring peer-reviewed, trusted knowledge and diverse voices can reach everyone, anywhere in the world:

Diversity in Health Interventions

- Self-determination and self-affirmative paths of trans* and gender diverse people in Portugal: Diverse identities and healthcare by C Moleiro et al, European Journal of Public Health
- Counting everyone: evidence for inclusive measures of disability in federal surveys by Jean P Hall et al, Health Affairs Scholar
- Creating inclusive communities for LGBTQ residents and staff in faith-based assisted living communities by Carey Candrian, Innovation in Aging
- Developing a co-designed, culturally responsive physical activity program for Pasifika communities in Western Sydney, Australia by Oscar Lederman et al, Health Promotion International
- Co-creating a Mpox Elimination Campaign in the WHO European Region: The Central Role of Affected Communities by Leonardo Palumbo et al, Open Forum Infectious Diseases
- Participatory development of a community mental wellbeing support package for people affected by skin neglected tropical diseases in the Kasai province, Democratic Republic of Congo by Motto Nganda et al, International Health

Inclusive Digital Health Strategies

- The ATIPAN project: a community-based digital health strategy toward UHC by Pia Regina Fatima C Zamora et al, Oxford Open Digital Health
- From disease specific to universal health coverage in Lesotho: successes and challenges encountered in Lesotho’s digital health journey by Monaheng Maoeng et al, Oxford Open Digital Health
- Implementing an inclusive digital health ecosystem for healthy aging: a case study on project SingaporeWALK by Edmund Wei Jian Lee PhD et al, JAMIA Open
- Developing the BornFyne prenatal management system version 2.0: a mixed method community participatory approach to digital health for reproductive maternal health by Miriam Nkangu et al, Oxford Open Digital Health

Equitable Energy Transitions

- Energy communities—lessons learnt, challenges, and policy recommendations by L Neij et al, Oxford Open Energy
- Solar-Powered Community Art Workshops for Energy Justice: New Directions for the Public Humanities by Anne Pasek et al, ISLE: Interdisciplinary Studies in Literature and Environment
- Community participation and the viability of decentralized renewable energy systems: evidence from a hybrid mini-grid in rural South Africa by Mahali Elizabeth Lesala et al, Oxford Open Energy
- Quantifying energy transition vulnerability helps more just and inclusive decarbonization by Yifan Shen et al, PNAS Nexus
- Renewable energy and energy justice in the Middle East: international human rights, environmental and climate change law and policy perspectives by A F M Maniruzzaman et al, The Journal of World Energy Law & Business

Protecting Local Cultures

- Enriching Cultural Heritage Communities: New Tools and Technologies by Alan Dix et al, Interacting with Computers
- A framework for tourism value chain ownership in rural communities by Michael Chambwe et al, Community Development Journal
- Local government engagement practices and Indigenous interventions: Learning to listen to Indigenous voices by Christine Helen Elers et al, Human Communication Research
- The strengths, gender, and place framework: a new tool for assessing community engagement by Justin See et al, Community Development Journal

Featured image by Mareike Mgwelo via Pexels.

5 books to master your transition to college [reading list]

As the days get cooler and autumn approaches, it’s the perfect time for a fresh start. Back to school is here. Whether your teen is heading off for another year at college or just beginning the transition, we’ve curated a selection of helpful guides to make the journey smoother. These titles are perfect companions for navigating this exciting new chapter.

Mastering the Transition to College: The Ultimate Guidebook for Parents of Teens With ADHD

Sending a teen off to college is a thrilling milestone, but for parents and caregivers of teens with ADHD, it can also bring unique challenges. Mastering the Transition to College is designed to ease those concerns by offering expert advice, practical strategies, and proven tools to help teens thrive both academically and emotionally during this transition.

Learn more about Mastering the Transition to College by Michael C. Meinzer

College Mental Health 101: A Guide for Students, Parents, and Professionals

College Mental Health 101 offers more answers, relief, resources, and research-backed information for families, students, and staff already at college or beginning the application process. With simple charts and facts, informal self-assessments, and quick tips for students and those who support them, the book includes hundreds of voices addressing common concerns.

Learn more about College Mental Health 101 by Christopher Willard, Blaise Aguirre, and Chelsie Green

Supporting Your Teen’s Mental Health: Science-Based Parenting Strategies for Repairing Relationships and Helping Young People Thrive

Teen mental health issues are rising at an alarming rate, and many families are unsure of how to best help their children. Supporting Your Teen’s Mental Health is an essential resource for parents and caregivers looking to support teenagers who are struggling with mental health concerns. Written in a conversational tone by psychologist and fellow parent Andrea Temkin-Yu, the workbook is a thorough, evidence-based guide to essential parenting strategies that have been proven to help improve relationships and behavior.

Learn more about Supporting Your Teen’s Mental Health by Andrea Temkin-Yu

If Your Adolescent Has Autism: An Essential Resource for Parents

While adolescence can be a tough time for parents and their teens, autistic teenagers may face specific challenges and need targeted support from the adults in their lives. The road ahead can be difficult for parents and caregivers, too, especially because the teenage years can involve surprising changes in their child and in society’s expectations of them.

Learn more about If Your Adolescent Has Autism by Emily J. Willingham

The Parents’ Guide to Psychological First Aid: Helping Children and Adolescents Cope With Predictable Life Crises

Just as parents can expect their children to encounter physical bumps, bruises, and injuries along the road to adulthood, emotional distress is also an unavoidable part of growing up. The sources of this distress range from toddlerhood to young adulthood, from the frustration of toilet training to the uncertainty of leaving home for the first time.

Learn more about The Parents’ Guide to Psychological First Aid edited by Gerald P. Koocher, Annette M. La Greca, Olivia Moorehead-Slaughter, and Nadja N. Lopez

Check out these books and more on Bookshop and Amazon.

Featured image by Tanja Tepavac via Unsplash.
